The following is my response to a post on r/theydidthemath. OP asked how fast the human brain would be compared to modern computers if we somehow turned it into one. Here’s what I wrote:

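The human brain is estimated to have around 86 billion neurons and 150 trillion synapses. If we consider each synapse as a binary switch, that would give us around 150 trillion bits of memory, or roughly 19 terabytes. In terms of processing speed, a neuron can fire up to around 200 times per second (average rates are much lower). If we consider each neuron as a simple processor that can perform one operation per firing, that would give us 86 billion × 200 = 17.2 trillion operations per second, or about 17 teraflops. If we instead count every synaptic event as an operation, we get 150 trillion × 200 = 30 quadrillion operations per second, or about 30 petaflops. (Strictly, these aren’t floating-point operations, but FLOPS is the unit people usually compare against.)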
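Here’s a quick Python sketch of that back-of-envelope arithmetic. It uses only the rough figures quoted above, and the one-bit-per-synapse and one-operation-per-spike rules are the same simplifying assumptions, not measurements.

```python
# Back-of-envelope brain "specs" from the rough figures quoted above.
NEURONS = 86e9         # ~86 billion neurons
SYNAPSES = 150e12      # ~150 trillion synapses
MAX_FIRING_HZ = 200    # assumed peak firing rate; average rates are far lower

def brain_estimates(neurons=NEURONS, synapses=SYNAPSES, rate_hz=MAX_FIRING_HZ):
    """Return (memory_bytes, neuron_ops_per_s, synaptic_events_per_s)."""
    memory_bytes = synapses / 8            # one bit per synapse
    neuron_ops = neurons * rate_hz         # one operation per neuron spike
    synaptic_events = synapses * rate_hz   # one operation per synapse per spike
    return memory_bytes, neuron_ops, synaptic_events

mem, ops, syn = brain_estimates()
print(f"memory: {mem / 1e12:.2f} TB")                     # memory: 18.75 TB
print(f"neuron-level: {ops / 1e12:.1f} trillion ops/s")   # neuron-level: 17.2 trillion ops/s
print(f"synapse-level: {syn / 1e15:.0f} peta-ops/s")      # synapse-level: 30 peta-ops/s
```

Which of the two speed figures you pick changes the answer by a factor of about 1,700 (the average number of synapses per neuron), which is a good reminder of how soft these estimates are.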

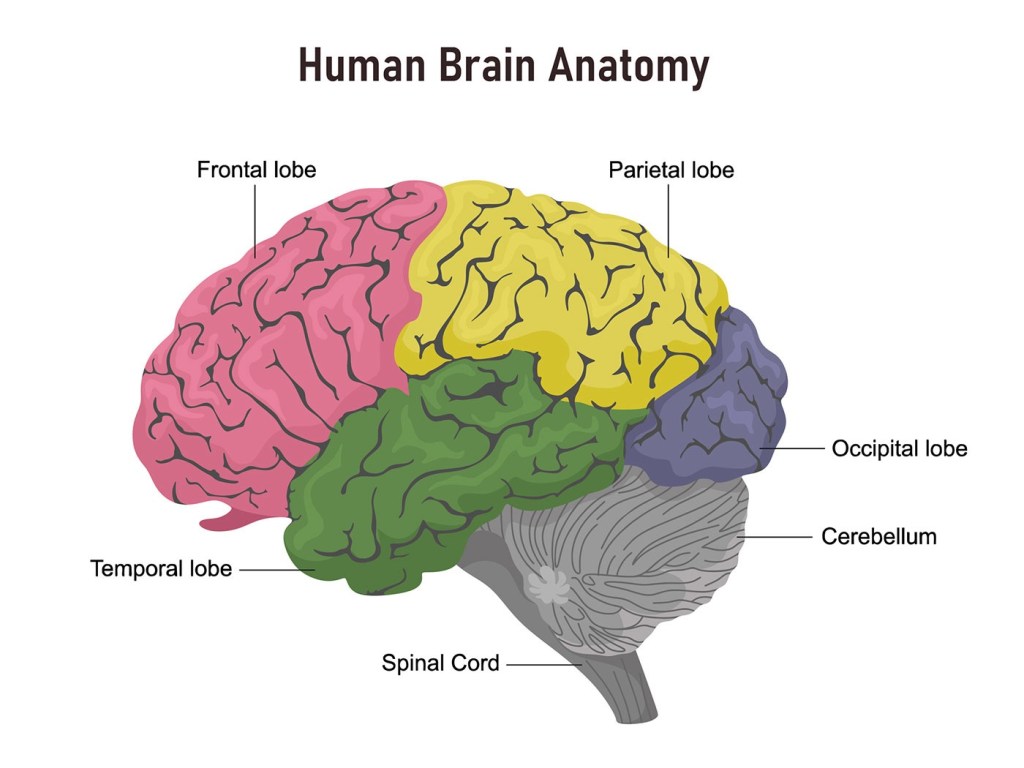

However, neurons don’t just act as simple on/off switches. Each spike is all-or-none, but whether and when a neuron fires depends nonlinearly on the combined inputs it receives. Moreover, they don’t just fire once: they can fire in rapid bursts or in rhythmic patterns. So each neuron is more like a little analog computer doing its own complex calculations.
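To see what a thresholded, nonlinear response looks like, here’s a minimal Python sketch of a leaky integrate-and-fire neuron. This is a standard textbook simplification and much cruder than a real neuron (no bursting, no dendritic computation), and all the parameter values (time constant, threshold, resistance, refractory period) are illustrative assumptions.

```python
def lif_spike_count(input_current, duration_s=1.0, dt=1e-4, tau=0.02,
                    v_rest=-70e-3, v_thresh=-54e-3, v_reset=-70e-3,
                    resistance=1e7, refractory_s=2e-3):
    """Spikes fired by a leaky integrate-and-fire neuron driven by a constant
    input current (amps) for duration_s seconds, using forward-Euler steps."""
    steps = int(round(duration_s / dt))
    refractory_steps = int(round(refractory_s / dt))
    v = v_rest
    spikes = 0
    cooldown = 0
    for _ in range(steps):
        if cooldown > 0:          # can't fire again during the refractory period
            cooldown -= 1
            continue
        # Membrane voltage leaks back toward rest while integrating the input.
        v += dt * (-(v - v_rest) + resistance * input_current) / tau
        if v >= v_thresh:         # all-or-none spike, then reset
            spikes += 1
            v = v_reset
            cooldown = refractory_steps
    return spikes

for current in (1.5e-9, 2e-9, 4e-9):
    print(f"{current * 1e9:.1f} nA -> {lif_spike_count(current)} spikes")
```

Below about 1.6 nA the voltage settles just under threshold and the model neuron stays silent; doubling the input from 2 nA to 4 nA more than doubles the spike count. Output is not proportional to input, even in this toy model.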

Then you have the synapses, which aren’t even static connections. They’re constantly changing their strength based on the activity of the neurons they connect. This is called synaptic plasticity, and it’s thought to be the basis of learning and memory in the brain. So the brain’s “software” is constantly rewriting itself as it learns and adapts.
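Plasticity is often modeled with Hebbian rules (“cells that fire together wire together”). Below is a minimal Python sketch using Oja’s rule, a textbook variant of Hebbian learning that adds a decay term so the weights stay bounded. It’s a swapped-in toy model, not a claim about how real synapses work, and the three-input setup and learning rate are assumptions for illustration: two inputs share a common source, while the third is independent noise.

```python
import random

def oja_update(weights, inputs, lr=0.01):
    """One step of Oja's rule: Hebbian growth (pre * post) minus a decay
    term proportional to post**2 that keeps the weight vector bounded."""
    post = sum(w * x for w, x in zip(weights, inputs))
    return [w + lr * post * (x - post * w) for w, x in zip(weights, inputs)]

def train(steps=5000, seed=0):
    rng = random.Random(seed)
    weights = [0.1, 0.1, 0.1]
    for _ in range(steps):
        shared = rng.gauss(0, 1)
        # Inputs 0 and 1 are driven by a common source; input 2 is independent noise.
        inputs = [shared + 0.1 * rng.gauss(0, 1),
                  shared + 0.1 * rng.gauss(0, 1),
                  0.3 * rng.gauss(0, 1)]
        weights = oja_update(weights, inputs)
    return weights

print([round(w, 2) for w in train()])
```

The weights on the correlated pair grow toward about 0.7 each, while the noise input’s weight shrinks toward zero: the connections “learn” which inputs tend to fire together.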

All of this is happening in a massively parallel way, with billions of neurons doing their own thing and interacting with each other in complex feedback loops. It’s a level of parallelism that makes even the most advanced supercomputers look like toys.

Plus, the brain doesn’t just crunch numbers: it perceives, imagines, dreams, and learns in ways we don’t fully understand. It can handle ambiguity and learn from just a few examples in ways that even advanced AI systems struggle with. So while we can estimate the brain’s raw computational power, it’s the unique architecture and algorithms of cognition that really set it apart.

Despite all this complexity, the brain is incredibly energy efficient. It uses only about 20 watts of power, less than a typical incandescent lightbulb. Compare that to supercomputers, which draw megawatts of power and require huge cooling systems.
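Here’s a rough Python sketch of that efficiency gap in operations per joule. The brain side uses the 20 W figure with 86 billion neurons × 200 spikes/s (low estimate) and 150 trillion synapses × 200 events/s (high estimate); the supercomputer side (1 exaflop at 20 MW) is a round-number assumption for a modern exascale machine, not the spec of any particular system. Spikes and floating-point operations aren’t the same thing, so treat the ratio as an order-of-magnitude illustration only.

```python
# Rough energy-efficiency comparison: operations per joule = (ops/s) / watts.
BRAIN_WATTS = 20.0
NEURON_OPS = 86e9 * 200       # one op per neuron spike at ~200 Hz
SYNAPSE_OPS = 150e12 * 200    # one op per synaptic event at ~200 Hz

# Assumption: a round-number exascale supercomputer, not a specific machine.
SUPER_FLOPS = 1e18
SUPER_WATTS = 20e6

def ops_per_joule(ops_per_second, watts):
    return ops_per_second / watts

super_eff = ops_per_joule(SUPER_FLOPS, SUPER_WATTS)
low = ops_per_joule(NEURON_OPS, BRAIN_WATTS) / super_eff
high = ops_per_joule(SYNAPSE_OPS, BRAIN_WATTS) / super_eff
print(f"supercomputer: {super_eff:.1e} FLOP/J")       # supercomputer: 5.0e+10 FLOP/J
print(f"brain advantage: {low:.1f}x to {high:,.0f}x")  # brain advantage: 17.2x to 30,000x
```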

So, how does the brain do it? Well, we don’t fully know. But one key seems to be its use of sparse, distributed representations. Instead of having a single neuron represent a concept, the brain spreads it out over a large population of neurons in a way that’s fault-tolerant and noise-resistant.
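As a toy illustration of why spreading a concept across many units is fault-tolerant, here’s a Python sketch in the style of the sparse binary “SDR” vectors used in some computational models. The population size (2048 units), the 40 active units per concept, and the concept names are all hypothetical choices for illustration, not figures about real brains.

```python
import random

N_BITS = 2048     # assumption: size of the neuron population
N_ACTIVE = 40     # assumption: ~2% of units active for any one concept

def random_sdr(rng):
    """A concept = a small random set of active units out of N_BITS."""
    return frozenset(rng.sample(range(N_BITS), N_ACTIVE))

def overlap(a, b):
    """Similarity = number of active units two representations share."""
    return len(a & b)

def damage(sdr, fraction, rng):
    """Silence a fraction of the active units (dead or noisy neurons)."""
    keep = int(len(sdr) * (1 - fraction))
    return frozenset(rng.sample(sorted(sdr), keep))

rng = random.Random(42)
concepts = {name: random_sdr(rng) for name in ["cat", "dog", "car"]}
noisy_cat = damage(concepts["cat"], 0.5, rng)   # lose half of "cat"'s units

for name, sdr in concepts.items():
    print(name, overlap(noisy_cat, sdr))
best = max(concepts, key=lambda name: overlap(noisy_cat, concepts[name]))
print(best)  # cat
```

Even with half its units silenced, the damaged pattern still shares 20 units with “cat” and almost none with the unrelated concepts, because random sparse patterns rarely overlap by chance.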

In terms of simplistic measures of speed and memory, the brain might be in petaflop supercomputer territory. But it’s doing a vastly different type of computation that’s much harder to compare apples-to-apples. The brain’s complexity is less about raw numbers and more about some secret sauce of cognition we haven’t fully figured out how to replicate in silico.
